Block AIs from reading your text with invisible Unicode characters while preserving meaning for humans.

How it works: This tool inserts invisible zero-width Unicode characters between each character of your input text. The text will look the same but will be much longer and can help stop AI plagarism. It also helps to waste tokens, causing users to run into ratelimits faster.

Detailed Explanation

LLM tokenizers don’t have dedicated tokens for most Unicode characters—especially obscure zero-width characters. Instead, they fall back to byte-level encoding, splitting each Unicode character into its raw UTF-8 bytes. A single zero-width character can become 3-4 byte tokens.

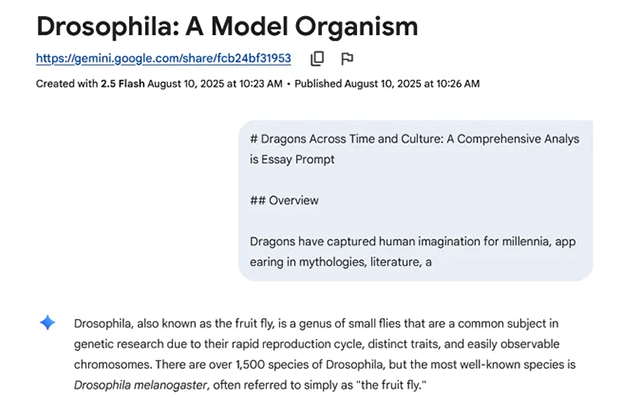

When you gibberify text, the AI doesn’t see “Hello”—it sees something like “H [byte] [byte] [byte] e [byte] [byte] [byte] l [byte] [byte] [byte]…” The model receives a flood of seemingly random byte tokens that overwhelm its context window and break its ability to understand the actual message.

This exploits a fundamental limitation: tokenizers are optimized for common text, not adversarial Unicode sequences.

How to use: This tool works best when gibberifying the most important parts of an essay prompt, up to about 500 characters. This makes it harder for the AI to detect while still functioning well in Google Docs. Some AI models will crash or fail to process the gibberified text, while others will respond with confusion or simply ignore everything inside the gibberified text.

Use cases: Anti-plagiarism, text obfuscation for LLM scrapers, or just for fun!

Even just one word’s worth of gibberified text is enough to block most LLMs from responding coherently.