We have seen plenty of impressive robotics videos over the last couple of years, but what 1X just announced feels like a fundamental shift in how these machines will actually enter our homes.

The Norwegian robotics company has revealed that its humanoid robot, NEO, can now learn and perform complex tasks simply by generating training video from a prompt. Whether it is a voice command or a text input, the robot essentially visualises the completion of a task and then executes those movements in the physical world.

Traditionally, training a robot was a painstaking process involving teleoperation, where a human would manually guide the robot through a task hundreds or thousands of times.

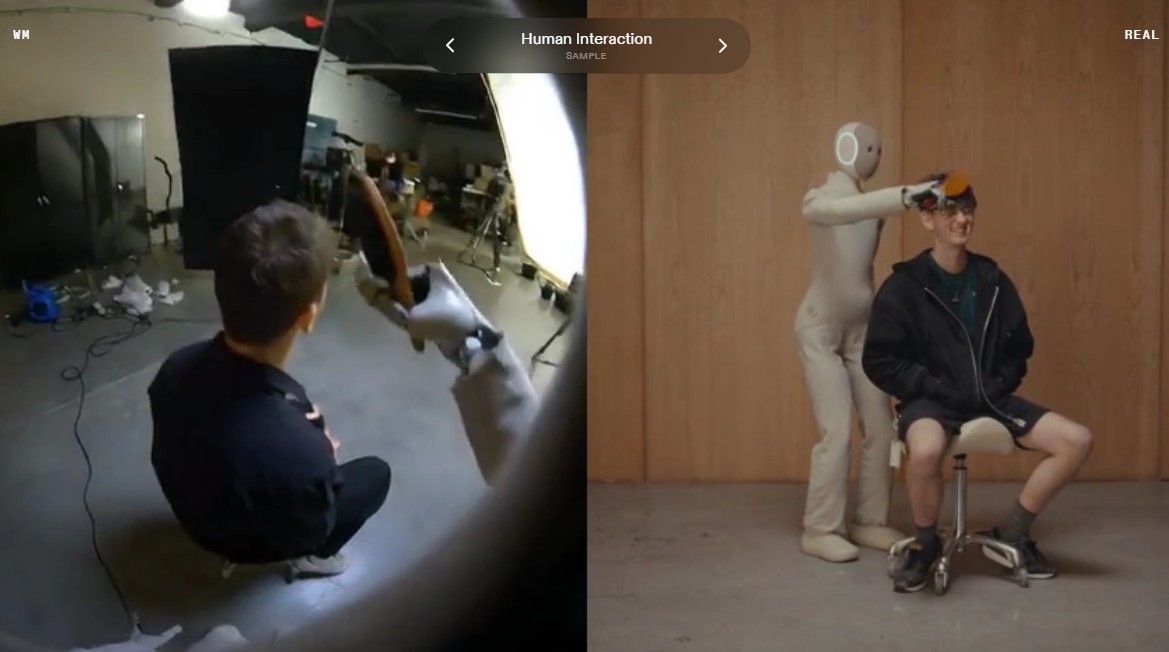

This new approach uses a world model pre-trained on internet-scale video data, allowing the robot to understand human interaction and physics without needing a specific demonstration for every single object.

“Neo can now turn any prompt into autonomous action with the latest update to the 1X world model.”

Eric Jang, VP of AI, 1X.

This matters because it targets the data bottleneck that has long held back the robotics industry: collecting enough real-world demonstrations to cover every task a robot might face.

By using video generation as a “cognitive core,” NEO can generalise to tasks it has never seen before, such as interacting with specific household items like a toilet seat or a lunchbox.

The robot sees the environment, generates a visualisation of the successful action, and then uses an inverse dynamics model to translate that imagined video into physical motion. This is a significant step beyond traditional robot AI models, which are often overly sensitive to changes in lighting or the presence of random objects in the room.

Because NEO is drawing on a vast library of human knowledge from the web, it understands the context of a room and how objects are supposed to behave.

“With the world model, NEO can create unlimited data of itself doing real-world tasks, creating a flywheel that enables NEO to teach itself anything.”

Eric Jang, VP of AI, 1X.

The potential for scaling is almost limitless, as the robot is no longer restricted to the specific library of tasks its creators had time to film.

In theory, if a human can think of a task and describe it, the robot can now attempt to perform it by “hallucinating” the correct physical sequence first. However, as exciting as this “dream to act” capability is, we need to balance the hype with some of the practical realities of current generation hardware and software.

The most immediate hurdle is time, as generating high-quality video data that is grounded in physics is not an instantaneous process. In the demonstrations, we see the robot pause to process the prompt and generate the internal video sequence before the first motor starts to turn.

For simple tasks like picking up an orange, a few seconds of “thinking” time is acceptable, but for time-sensitive applications, this latency could be a dealbreaker. There is also the very real cost of compute to consider, as running these massive world models requires significant inference power.

Just as every query to an LLM such as ChatGPT or Gemini consumes tokens and costs money, every “visualisation” NEO performs carries a cost in silicon and electricity.

At inference time, the system receives a text prompt and a starting frame. The world model (WM) rolls out the intended future as video, the inverse dynamics model (IDM) extracts the trajectory needed to reproduce it, and the robot executes that sequence in the real world.
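The three stages described above can be sketched in a few lines of Python. This is only a toy illustration of the data flow, not 1X's implementation: the class names (`ToyWorldModel`, `ToyInverseDynamicsModel`, `ToyRobot`), the `run_prompt` helper, and the idea of representing a “frame” as a short list of numbers are all assumptions made for the example. A real world model would generate video, and a real inverse dynamics model would be a learned network.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical stand-in for a camera image: a flat list of numbers.
Frame = List[float]
Action = List[float]


@dataclass
class ToyWorldModel:
    """Toy stand-in for a video world model (assumption, not 1X's API)."""
    horizon: int = 4  # number of future frames to imagine

    def rollout(self, prompt: str, start_frame: Frame) -> List[Frame]:
        # A real model would generate video conditioned on the prompt.
        # Here the prompt only decides the direction of a fixed step.
        step = 0.1 if "raise" in prompt else -0.1
        frames = [list(start_frame)]
        for _ in range(self.horizon):
            frames.append([value + step for value in frames[-1]])
        return frames


class ToyInverseDynamicsModel:
    """Toy stand-in: infers the action linking each pair of frames."""

    def actions(self, frames: List[Frame]) -> List[Action]:
        # The "action" is simply the per-element change between frames.
        return [
            [after - before for before, after in zip(f0, f1)]
            for f0, f1 in zip(frames, frames[1:])
        ]


class ToyRobot:
    """Toy robot whose state is updated by adding each action."""

    def __init__(self, state: Frame) -> None:
        self.state = list(state)

    def execute(self, actions: List[Action]) -> Frame:
        for action in actions:
            self.state = [s + a for s, a in zip(self.state, action)]
        return self.state


def run_prompt(prompt: str, start_frame: Frame) -> Frame:
    wm = ToyWorldModel()
    idm = ToyInverseDynamicsModel()
    robot = ToyRobot(start_frame)
    imagined = wm.rollout(prompt, start_frame)   # 1. imagine the future
    trajectory = idm.actions(imagined)           # 2. recover the actions
    return robot.execute(trajectory)             # 3. act in the "world"


final_state = run_prompt("raise the arm", [0.0, 0.0])
```

In this toy version the robot ends up exactly where the imagined video said it would. On real hardware the gap between the imagined rollout and what the motors actually achieve is where most of the difficulty lies.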

If every single action requires a fresh generation from a world model, the operational costs of a home robot could become a monthly subscription headache for early adopters.
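To get a feel for how that could add up, here is a back-of-the-envelope calculation. Every number in it is a placeholder assumption chosen for illustration, not a figure from 1X: the function name `monthly_inference_cost`, the actions per day, the GPU seconds per generation, and the hourly compute price are all hypothetical.

```python
def monthly_inference_cost(
    actions_per_day: int,
    gpu_seconds_per_generation: float,
    cost_per_gpu_hour: float,
    days: int = 30,
) -> float:
    """Estimate monthly compute spend if every action needs a fresh generation.

    All inputs are illustrative assumptions, not published 1X figures.
    """
    total_gpu_seconds = actions_per_day * gpu_seconds_per_generation * days
    total_gpu_hours = total_gpu_seconds / 3600
    return total_gpu_hours * cost_per_gpu_hour


# Placeholder values: 100 actions a day, 10 GPU-seconds each, $2 per GPU-hour.
estimate = monthly_inference_cost(
    actions_per_day=100,
    gpu_seconds_per_generation=10.0,
    cost_per_gpu_hour=2.0,
)
print(f"Estimated monthly compute: ${estimate:.2f}")
```

The point is the shape of the formula rather than the result: cost scales linearly with how often the robot acts and how long each generation takes, so shorter generations or reusing previously generated skills would cut the bill directly.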

Currently, 1X is working on increasing the length and success rate of these generated abilities to make them more reliable for everyday use. We are still in the early days of this technology, and while the video shows NEO handling a toilet seat and a lunchbox, the real world is much messier than a lab.

Despite these challenges, the idea of a robot that grows in capability alongside the rapidly improving world of generative AI video models is compelling.

As models like Sora or Kling become more sophisticated at understanding 3D space, robots like NEO could become more capable without needing new hardware. This points to a self-improving loop in which the robot generates its own training data, learns from its attempts, and refines its physical performance over time.

For the Australian market, where we are often early adopters of smart home tech, the prospect of a truly general-purpose assistant feels closer than ever. While we do not have a confirmed local price for the NEO Beta, 1X has previously said it aims to make its robots affordable for the mass market in the long term.

To put it in perspective, high-end humanoid research platforms can cost hundreds of thousands of dollars, but the goal for consumer units is to eventually reach the price of a small car.

If we look at similar tech in the space, we could expect a premium humanoid to sit somewhere around the A$30,000 to A$50,000 mark initially, though that is purely speculative. The “flywheel” effect mentioned by the 1X team is the most promising part of this announcement for anyone who wants a robot that can actually do the dishes.

If the robot can teach itself through simulation and video generation, the rate at which it adds new skills to its repertoire could accelerate sharply. We are moving away from “if-then” programming and into an era where we simply tell a machine what we want and let it figure out the “how.”

It is a paradigm shift that feels like the “iPhone moment” for robotics, even if we are still waiting for the apps to be written. The 1X world model represents a future where the physical world is just another prompt-to-output interface for AI.

As we watch NEO navigate these tasks, it is clear that the barrier between digital intelligence and physical labour is finally starting to dissolve. There is a lot of work left to do on battery life, motor durability, and the sheer speed of inference, but the foundation has been laid.

The ability to generalise to any task with any object is the “Holy Grail” of robotics, and 1X seems to have found a very clever shortcut.

It is a fascinating time to watch this space, especially as we see how these “world models” handle the unpredictability of human environments.

For more information, head to https://www.1x.tech/discover/world-model-self-learning