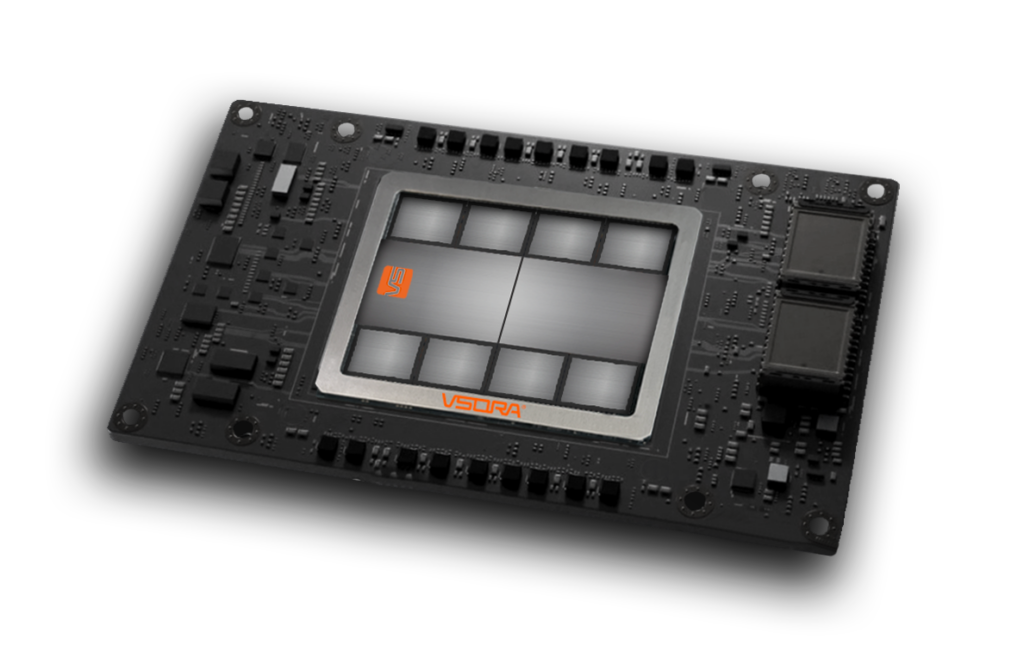

In AI data centers, speed, efficiency, and scale aren’t optional: they’re everything. Jotunn8, our ultra-high-performance inference chip, is built to deploy trained models with lightning-fast throughput, minimal cost, and maximum scalability. Designed around what matters most (performance, cost-efficiency, and sustainability), it delivers the power to run AI at scale, without compromise!

Why it matters: Critical for real-time applications like chatbots, fraud detection, and search.

Reasoning models, Generative AI, and Agentic AI are increasingly being combined to build more capable and reliable systems. Generative AI provides flexibility and language fluency. Reasoning models provide rigor and correctness. Agentic frameworks provide autonomy and decision-making. The VSORA architecture enables smooth and easy integration of these algorithms, delivering near-theoretical performance.

Why it matters: AI inference is often run at massive scale, so reducing cost per inference is essential for business viability.